Data-driven business models are becoming the norm across every sector. But data quality is often poor, with limited information on its source, veracity or lineage. This can have a real impact on your end users, who may not fully understand how reliable that data is, or whether they should trust it to make important business decisions or inform regulatory reporting.

Data quality issues also have a huge impact on your data science teams, who often spend more time cleaning data to get it into a usable format than analysing it for actionable insights. That can inflate the cost of any data project and erode your data scientists' motivation to stay with the organisation. Data scientists are an in-demand and expensive resource, so losing them is bad news for your business.

Data, data everywhere

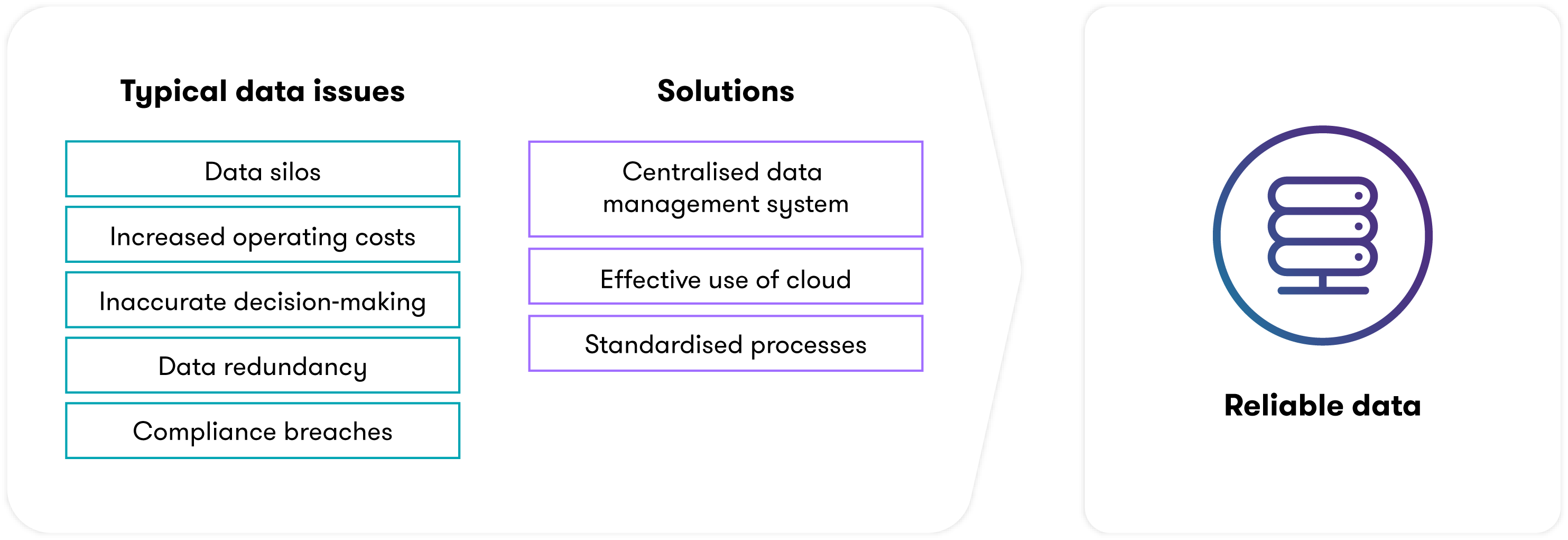

Poor data quality is a real problem – and it’s only going to get worse. The sheer volume of data being collected is growing by the day, and it’s reached a tipping point where manual processes won’t cut it anymore. The potential consequences are manifold:

- Data silos – data becomes fragmented, leading to inconsistencies and multiple versions of the truth

- Increased operating costs – it can be expensive to continually correct data and reconcile inconsistencies

- Inaccurate decision-making – when poor-quality data informs decision-making processes, it can cause strategic, financial and reputational damage

- Data redundancy – if your people don’t trust the data, they’ll stop using it to drive business decisions, minimising its potential value while retaining the cost of data collection, storage and management

- Compliance breaches – poor data quality can undermine financial reports and regulatory returns, and can lead to breaches of the General Data Protection Regulation (GDPR)

You need an easy-to-use solution to help you maintain data quality, track original data sources and find discrepancies in real time. That will help you identify data issues as they happen and prevent errors from being carried downstream. It will also take the pressure off your data scientists when they are ready to analyse the data.
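As a minimal sketch of catching discrepancies as records arrive, the Python below checks each incoming record against a small set of validation rules and quarantines any that fail, so they are not passed downstream. The field names (`customer_id`, `email`, `amount`) and the rules themselves are hypothetical examples, not features of any particular product.

```python
import re
from dataclasses import dataclass, field

# Hypothetical rules: each maps a readable rule name to a predicate over a record (a dict).
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

RULES = {
    "customer_id present": lambda r: bool(r.get("customer_id")),
    "email well-formed": lambda r: bool(EMAIL_PATTERN.match(r.get("email") or "")),
    "amount non-negative": lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
}


@dataclass
class ValidationResult:
    passed: list = field(default_factory=list)
    quarantined: list = field(default_factory=list)  # (record, names of failed rules)


def validate(records):
    """Split records into those that pass every rule and those that fail at least one."""
    result = ValidationResult()
    for record in records:
        failures = [name for name, check in RULES.items() if not check(record)]
        if failures:
            result.quarantined.append((record, failures))
        else:
            result.passed.append(record)
    return result


if __name__ == "__main__":
    incoming = [
        {"customer_id": "C001", "email": "a@example.com", "amount": 120.0},
        {"customer_id": "", "email": "not-an-email", "amount": -5},
    ]
    outcome = validate(incoming)
    print(len(outcome.passed), "passed")
    for record, failures in outcome.quarantined:
        print("quarantined:", failures)
```

Quarantining rather than silently dropping a failed record keeps it available for someone to correct at source, which is where the fix does the most good.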

Establishing an enterprise-wide data quality solution

You can solve many typical data issues through a centralised, enterprise-wide data management system that keeps all your data in one place. Consolidating your data will help you create a single 'golden source of truth', accessible by all teams across the business. This immediately reduces the risk of multiple spreadsheets, held by different teams, containing conflicting information and suffering inevitable version control issues.
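Consolidation usually means deciding which value survives when sources disagree. The Python below is a minimal sketch of one common survivorship rule, "most recent update wins", applied field by field, with every disagreement logged for review. The source names, the `customer_id` key and the `updated_at` field are hypothetical, and dedicated master data management tools typically offer richer rules than this.

```python
from datetime import datetime


def build_golden_records(sources):
    """Merge records from several sources into one record per customer_id.

    `sources` maps a source name to a list of dict records, each with a
    `customer_id`, an `updated_at` datetime and other fields. For each field,
    the value from the most recently updated record wins; None never
    overwrites a known value. Disagreements are returned as conflicts.
    """
    golden = {}
    conflicts = []
    # Flatten and sort oldest-first, so newer values overwrite older ones.
    all_records = sorted(
        ((name, rec) for name, recs in sources.items() for rec in recs),
        key=lambda pair: pair[1]["updated_at"],
    )
    for source_name, record in all_records:
        merged = golden.setdefault(record["customer_id"], {})
        for field_name, value in record.items():
            if field_name in ("customer_id", "updated_at") or value is None:
                continue
            previous = merged.get(field_name)
            if previous is not None and previous != value:
                # (key, field, old value, new value, source of the new value)
                conflicts.append((record["customer_id"], field_name, previous, value, source_name))
            merged[field_name] = value
    return golden, conflicts


if __name__ == "__main__":
    sources = {
        "sales_sheet": [{"customer_id": "C001", "email": "old@example.com",
                         "phone": "0100", "updated_at": datetime(2024, 1, 5)}],
        "support_sheet": [{"customer_id": "C001", "email": "new@example.com",
                           "phone": None, "updated_at": datetime(2024, 3, 1)}],
    }
    golden, conflicts = build_golden_records(sources)
    print(golden)
    print(conflicts)
```

In this example the golden record keeps the newer email and the older phone number, and the email disagreement is reported so that a data owner can confirm which value is correct.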

A centralised system also makes it easier to apply machine learning and artificial intelligence techniques to identify inconsistencies. You can also build an interactive dashboard, using Power BI or similar, to give an overview of data quality across a specific dataset or to take a closer look at a specific record set for greater insights.
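A dashboard of this kind needs per-dataset quality metrics to display. The sketch below computes two simple ones, completeness and validity, for each column of a list of records. The columns and validity rules are hypothetical, and the metrics are plain rule-based counts rather than machine learning; exporting the result to Power BI or a similar tool is not shown.

```python
def quality_summary(records, columns, validators):
    """Per-column completeness and validity percentages for a list of dict records.

    completeness_pct: share of records where the column is present and not None.
    validity_pct: share of those non-missing values that pass the column's rule,
    or None when no rule is defined (or there are no values to check).
    """
    total = len(records)
    summary = {}
    for column in columns:
        values = [r.get(column) for r in records if r.get(column) is not None]
        completeness = 100 * len(values) / total if total else 0.0
        rule = validators.get(column)
        if rule is not None and values:
            validity = 100 * sum(1 for v in values if rule(v)) / len(values)
        else:
            validity = None
        summary[column] = {"completeness_pct": completeness, "validity_pct": validity}
    return summary


if __name__ == "__main__":
    records = [
        {"customer_id": "C001", "country": "GB", "amount": 120.0},
        {"customer_id": "C002", "country": "FR", "amount": -5.0},
        {"customer_id": None, "country": "GB", "amount": 30.0},
        {"customer_id": "C004", "country": "XX"},  # amount missing
    ]
    validators = {
        "country": lambda c: c in {"GB", "FR", "DE"},  # hypothetical allowed list
        "amount": lambda a: a >= 0,
    }
    for column, stats in quality_summary(records, ["customer_id", "country", "amount"], validators).items():
        print(column, stats)
```

Tracking these figures over time, rather than as a one-off snapshot, is what turns them into the ongoing overview described below.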

Ultimately, this presents a real-time overview of your data quality, helping you address any issues sooner and prevent errors from being carried downstream. This ongoing approach will also reduce the time spent cleaning data, letting your data scientists focus on what they do best: generating actionable insights.

Using cloud computing effectively

To support an enterprise-wide data management system, you need to think about what kind of data you collect. Data comes in all shapes and sizes, and different types have different quality requirements. For example, you can assess streaming data in real time, but you need to review transactional data in batches, often at the end of every day or a set financial period. That creates peaks and troughs in computational power and storage requirements.
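To illustrate the difference, the sketch below pairs a lightweight check that runs on each streaming event with an end-of-period batch reconciliation that compares summed transaction amounts per account against control totals. The field names, the control-total feed and the tolerance value are assumptions made for the example.

```python
from collections import defaultdict


def check_event(event):
    """Streaming check: cheap enough to run on every event as it arrives."""
    problems = []
    if event.get("amount") is None:
        problems.append("missing amount")
    if not event.get("account"):
        problems.append("missing account")
    return problems


def reconcile_batch(transactions, control_totals, tolerance=0.01):
    """Batch check: at the end of the period, compare summed amounts per account
    against control totals reported by the source system (a hypothetical feed).
    Returns {account: (expected, actual)} for every account outside tolerance."""
    totals = defaultdict(float)
    for t in transactions:
        totals[t["account"]] += t["amount"]
    mismatches = {}
    for account in set(totals) | set(control_totals):
        expected = control_totals.get(account, 0.0)
        actual = totals.get(account, 0.0)
        if abs(actual - expected) > tolerance:
            mismatches[account] = (expected, actual)
    return mismatches


if __name__ == "__main__":
    stream = [
        {"account": "A1", "amount": 100.0},
        {"account": "A1", "amount": 50.0},
        {"account": "A2", "amount": None},
        {"account": "A2", "amount": 75.0},
    ]
    accepted = []
    for event in stream:
        problems = check_event(event)
        if problems:
            print("flagged in real time:", event, problems)
        else:
            accepted.append(event)
    # End of day: compare against hypothetical control totals from the source system.
    print(reconcile_batch(accepted, {"A1": 150.0, "A2": 80.0}))
```

The per-event check uses little compute and runs continuously, while the reconciliation needs the whole period's data at once, which is exactly the kind of workload that produces the peaks described above.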

Many firms still use in-house capabilities to store and assess this data, but it's not the most cost-effective approach, because in-house infrastructure has to be sized for peak demand and then sits idle during the troughs. Good use of cloud or computation-as-a-service models can offer affordable and scalable options to manage that demand.

Standardising your data processes

Standardising data processes across the business will help you effectively embed an enterprise-wide data management tool. In many organisations, each team follows its own data process, with different rules on how to draw from, interact with and feed into the data ecosystem. As businesses expand, that problem only gets worse, as different teams, offices and branches all do their own thing. By the time you get to international offices, data processes can vary hugely, with a knock-on effect on quality.

It's essential to embed a standardised set of data quality policies that can be used by every team across the business, regardless of geographic location or job function. These must be supported by effective governance and oversight processes to make sure data policies are being put into action, with key individuals holding ultimate responsibility and accountability. Many teams may need further training to make sure they understand data quality expectations and the role they play.

Where to start with data quality tools

Setting up an enterprise-wide data quality solution may seem expensive in the short term, but it will save money in the long term by restoring trust in your data and helping your team draw more effective insights. There are a number of ways to get started:

- Assess and understand your needs, including business use cases, IT infrastructure and data characteristics (such as volume, variety, veracity and velocity)

- Review potential vendors to find an enterprise-wide data quality solution that meets your needs and budget

- Set up a team to start standardising your data processes and create enterprise-wide data policies

- Establish key responsibilities and accountable individuals to improve governance and oversight

- Deliver training to all teams on how to maintain data quality and interact with the enterprise-wide data management system

You may already be using cloud platforms such as Azure, GCP or AWS (to name a few), which offer additional modules to help you improve your data quality. These could be good options in the short term, while you establish a cohesive enterprise-wide data quality solution.

Ultimately, improving your data quality will free your data scientists to focus more on drawing reliable, actionable insights for senior management. This builds trust and, in turn, empowers your senior leadership team to make confident, bold decisions about the future of your business.
